Stop operating in the dark — we need continuous, runtime IaaS visibility

It feels like IT and security pros are tasked with the impossible job of operating business-critical applications in Infrastructure as a Service (IaaS) environments in the dark, with no ability to monitor and protect them in runtime. Hype is everywhere about using vulnerability and configuration scans to protect cloud-native apps and data. DevOps (development and operations) and DevSecOps (development, security and operations) experts are expected to predict what might happen in their IaaS environments pre-runtime and then to work from daily post-mortem lists of vulnerabilities and risky configurations: lists that have no resource context and deliver no live monitoring or control over what’s actually happening in their environment.

What happens when you can’t fix a vulnerability or a risky configuration? Maybe there isn’t a vulnerability fix available, maybe your application requires a certain configuration, or maybe you simply don’t have time to get to the hundreds of items on the list because no one has unlimited man-hours. The constant news reports of data breaches, ransomware, crypto-jacking, and distributed denial-of-service (DDoS) attacks make it clear that protecting dynamic cloud systems requires more than predictions and post-mortem lists. We need to know these apps and environments are secure while they are running. How do you make continuous, runtime IaaS visibility and control a reality?

Shine a light on apps running in Microsoft Azure, AWS, GCP, and IBM Cloud

For a security professional tasked with protecting IaaS environments (in Microsoft Azure, AWS, GCP, or IBM Cloud) and the data they contain, the job can feel like an endless guessing game.

It would be so much better to know at a glance: What microservice is connected to what resource? How is the traffic flowing between my microservices? Is there anything odd about that traffic? Where is my sensitive data stored, and where is it flowing inside my cloud? Are any of my vulnerabilities and risky configurations associated with critical services and sensitive data? Am I under attack right now? Have I been compromised? Can I respond at the moment of attack to protect my resources and keep my applications up and running?

There are basic steps you can take to protect your environment from a traditional VM-based architecture perspective. And your options become even more powerful if you are using Kubernetes, especially if you adopt a service mesh.

Control access, sensitive data, and security posture in runtime

In traditional cloud architecture, you should be actively monitoring and controlling access and activities in your cloud. And you should apply a data-centric lens. After all, a key reason your cloud is at risk is that it contains valuable data.

Classify your data, monitor it, and control access to it. Data storage is handled differently depending on your IaaS platform, but the fundamental requirement remains the same: track where sensitive, regulated and confidential data is kept and who or what has access to it. In Microsoft Azure, that means file, blob, and queue storage. In Google Cloud Platform (GCP), it is the standard, nearline, coldline and archive storage classes. For IBM Cloud, you have smart, standard, vault and cold vault. And, of course, there is S3 in Amazon Web Services (AWS). It isn’t just object storage, either. Remember to extend this monitoring and control to your databases as well.

Set policies to automatically detect if you have public or external access links to personally identifiable information (PII), payment card data (PCI), or other highly confidential data. Investigate and remove those links. This seems so basic, but most organizations are not doing this. We regularly see news reports of data exposures discovered in cloud environments that have existed for a year or more.
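A policy like this can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's API: the `StoredObject` record, its fields, and the label names are assumptions standing in for whatever inventory and classification data your platform exposes.

```python
from dataclasses import dataclass

# Hypothetical classification labels; substitute the ones your classifier emits.
SENSITIVE_LABELS = {"PII", "PCI", "CONFIDENTIAL"}


@dataclass(frozen=True)
class StoredObject:
    name: str
    labels: frozenset   # classification labels attached to the object
    public_link: bool   # True if an anonymous or external link exists


def find_exposed(objects):
    """Return objects that are both sensitive and publicly reachable."""
    return [o for o in objects if o.public_link and o.labels & SENSITIVE_LABELS]


# Illustrative inventory, not real data.
inventory = [
    StoredObject("invoices/2023.csv", frozenset({"PCI"}), True),
    StoredObject("logo.png", frozenset(), True),
    StoredObject("hr/payroll.xlsx", frozenset({"PII"}), False),
]

exposed = find_exposed(inventory)
for obj in exposed:
    print(f"Investigate and remove public link: {obj.name}")
```

Run on a schedule against a fresh inventory, a check of this shape surfaces exposures within hours rather than a year later.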

Track user and account behavior and take action to block high-risk activity. For example, you can put guardrails around your environment to whitelist or blacklist access by country or IP range. Detect high-risk user and account behavior, such as multiple failed logins, impossible locations, abnormal system changes, abnormal encryption or deletion of files, and abnormal requests, and set policies to respond by alerting you, locking out those users, or challenging them with multi-factor authentication (MFA).
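As a rough sketch of how two of these guardrails could combine, the Python below checks each login against an allowed IP range and counts failed attempts per user. The network range, the threshold, the event tuple shape, and the action names are all assumptions chosen for illustration.

```python
import ipaddress
from collections import Counter

# Assumed policy values; 203.0.113.0/24 is a reserved documentation range.
ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
MAX_FAILED_LOGINS = 3


def ip_allowed(ip):
    """True if the source address falls inside an allowed network."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in ALLOWED_NETWORKS)


def decide(events):
    """Map each user to an action, given (user, source_ip, succeeded) events."""
    failures = Counter(user for user, _, ok in events if not ok)
    actions = {}
    for user, ip, _ in events:
        if not ip_allowed(ip):
            actions[user] = "block"            # outside the guardrail
        elif failures[user] >= MAX_FAILED_LOGINS and actions.get(user) != "block":
            actions[user] = "challenge_mfa"    # too many failed logins
        else:
            actions.setdefault(user, "allow")
    return actions


events = [
    ("alice", "203.0.113.10", True),
    ("bob", "203.0.113.20", False),
    ("bob", "203.0.113.20", False),
    ("bob", "203.0.113.20", False),
    ("mallory", "198.51.100.7", True),
]
actions = decide(events)
```

A production system would work on a sliding time window and add signals such as impossible locations, but the decision structure stays the same: detect, then alert, lock out, or challenge.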

Monitor network flow, sensitive data, and security posture between and in microservices

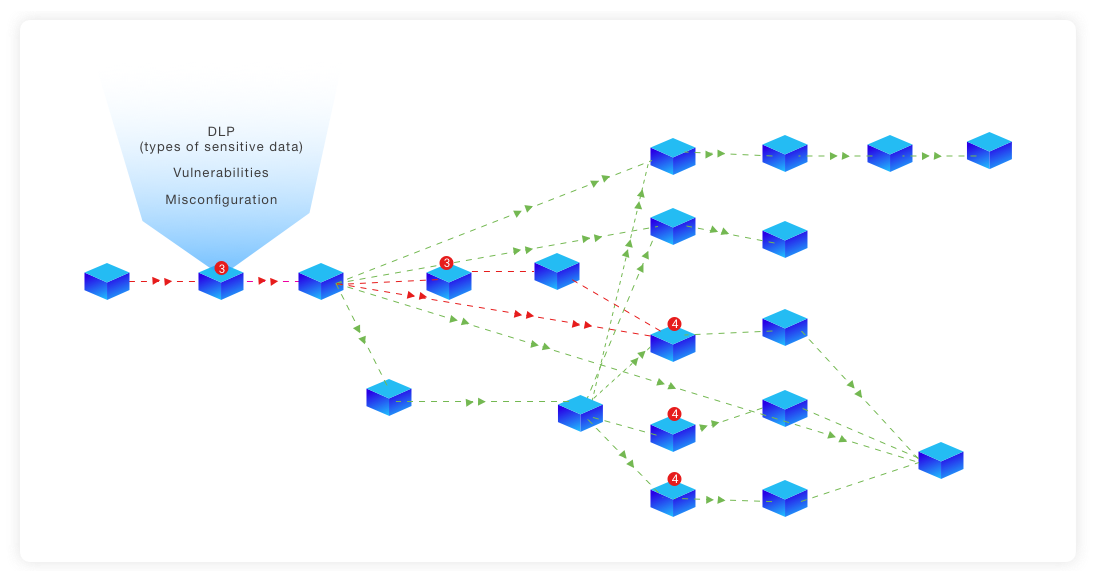

It would be really useful to have a unified runtime view of your cloud-native application environment that showed the state of traffic flow through your connected microservices with the security posture of those services and if there is any sensitive data stored or flowing through the system right now as it is operating.

It would look something like this:

Here’s a concept unified security view of a running application environment using Kubernetes. Image courtesy of Microsec.ai

With visibility like this, you could monitor the microservices and traffic in your environment with the context of security posture and data sensitivity in runtime. This would be a good way to immediately see where security issues are in an application, and it would give you the key contextual information to prioritize what to fix. For example, you would know to prioritize your response to an issue detected in a critical workload like a checkout service because you know it contains sensitive payment card data and drives revenue for your business.
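One simple way to picture context-aware prioritization is a score that weights scanner severity by business criticality and data sensitivity. The sketch below is only an assumption about how such a ranking might work: the `Finding` fields, the doubling weights, and the sample workloads are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    workload: str
    severity: int                 # 1 (low) to 10 (critical), e.g. from a scanner
    business_critical: bool       # does the workload drive revenue?
    handles_sensitive_data: bool  # does it store or process PII/PCI?


def priority(finding):
    """Weight raw severity by runtime context (weights are illustrative)."""
    score = finding.severity
    if finding.business_critical:
        score *= 2
    if finding.handles_sensitive_data:
        score *= 2
    return score


findings = [
    Finding("internal-wiki", 9, False, False),
    Finding("checkout", 6, True, True),
    Finding("image-resizer", 7, False, False),
]

ranked = sorted(findings, key=priority, reverse=True)
```

Here the checkout service rises to the top despite its lower raw severity, which is the kind of reordering a context-free list cannot provide.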

If you can’t fix a vulnerability or change a risky configuration, you should recognize the risk and apply other risk mitigation measures through elevated security policies. You could use user and entity behavior analytics (UEBA), network controls, and access controls to detect and isolate risky resources or block high-risk users and accounts in runtime.

If you have traffic flowing to an unauthorized connection or a DDoS attack under way, your system should detect it and make it easy to block, without taking the application offline, using a network policy that is easily or even automatically activated. If you have a resource that has been hijacked or compromised with malware, you could use a micro-segmentation approach with east-west network policies to isolate that resource and contain the blast radius of an attack. In the old days, when you had a compromised server, you disconnected it from the network and isolated it to stop the spread of the attack. This is how you would do that in the cloud.
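In Kubernetes, one way to approximate "disconnecting the server" is a NetworkPolicy that selects the compromised pods and lists both Ingress and Egress policy types with no allow rules, which denies all traffic to and from them. The sketch below builds such a manifest in Python. The policy name, the `quarantine=true` label, and the `shop` namespace are hypothetical, and enforcement assumes a cluster network plugin that supports NetworkPolicy.

```python
import json


def quarantine_policy(namespace, label_key="quarantine", label_value="true"):
    """Build a deny-all NetworkPolicy for pods carrying the quarantine label."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "quarantine-deny-all", "namespace": namespace},
        "spec": {
            # Applies only to pods that carry the (hypothetical) label.
            "podSelector": {"matchLabels": {label_key: label_value}},
            # Both directions are listed with no allow rules, so all
            # ingress and egress traffic for the selected pods is denied.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


policy = quarantine_policy("shop")
manifest = json.dumps(policy, indent=2)
print(manifest)
```

With the policy in place, labeling a suspect pod is enough to isolate it while the rest of the application keeps running.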

You could add data classification and data loss prevention to track sensitive data flow east-west within your environment and alert you if you have PII, PCI, healthcare, or other confidential data flowing to unauthorized zones, workloads, or application programming interfaces (APIs) in your cloud. And with this data classification, those same useful micro-segmentation network policies could block this risky movement of data.
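To make the data loss prevention idea concrete, here is a minimal sketch that flags payment card numbers in a payload and checks whether the destination is authorized to receive them. It uses a digit pattern plus the Luhn checksum to reduce false positives. The destination name and the sample payloads are assumptions, and real classifiers cover far more data types than this.

```python
import re

# 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")

# Hypothetical allowlist of workloads permitted to receive card data.
AUTHORIZED_PCI_DESTINATIONS = {"payment-gateway"}


def luhn_valid(digits):
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def contains_card_number(payload):
    """True if the payload holds a digit run that passes the Luhn check."""
    for match in CARD_PATTERN.finditer(payload):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            return True
    return False


def flow_violates_policy(destination, payload):
    """True if card data is headed to a destination not on the allowlist."""
    return (contains_card_number(payload)
            and destination not in AUTHORIZED_PCI_DESTINATIONS)
```

For example, `flow_violates_policy("analytics", "card=4111 1111 1111 1111")` flags the flow, since `4111 1111 1111 1111` is a widely used test card number that passes the Luhn check, while the same payload sent to `payment-gateway` does not.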

Runtime IaaS Visibility and Protection

You don’t have to rely solely on lists of vulnerabilities and misconfigurations to protect your cloud applications and the data they contain. Everyone is ignoring the runtime elephant in the room. Cloud environments, applications, and data need runtime visibility and protection that’s data-centric and provides the controls needed to protect applications and data in operation. And since no one has unlimited time, look for options that don’t require installing agents and sidecars. Cloud and Kubernetes APIs are powerful enough that you could do this today. Stop operating in the dark.
