Duplicate Alerts Draining Security Analysts' Time

New research shows that roughly one-third of analysts' time is lost to duplicate alerts.

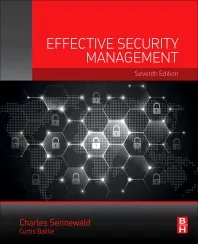

Chart 1: Approximately one-third of alerts were exact duplicates of other ones, meaning that one-third of analysts' time is being spent processing alerts that have unknowingly already been processed. Chart courtesy of Siemplify.

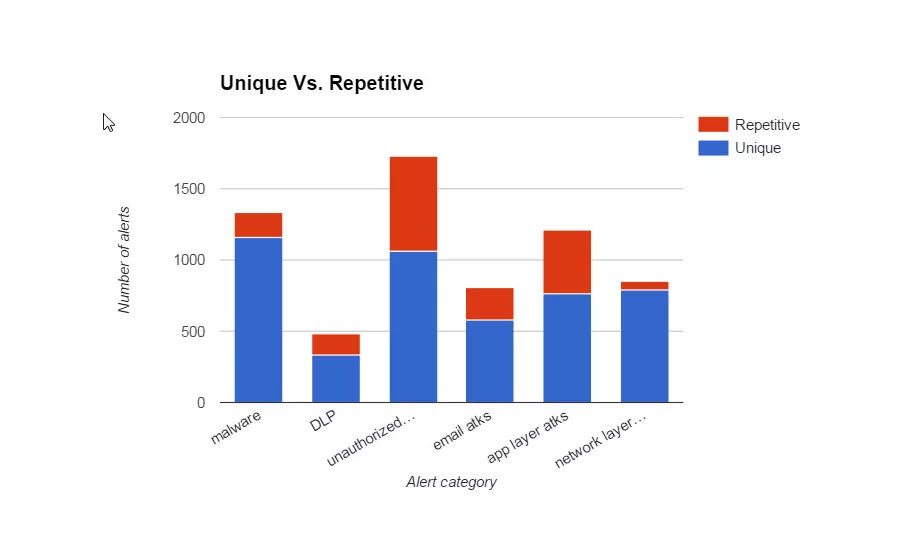

Chart 2: Alerts were divided into three categories: real alerts (true), false positives (FP) and true alerts caused by internal IT or SOC teams (FP*). Looking at the total number of hours analysts spent processing this data set, we can extrapolate that over 190 hours of work time were spent processing duplicate events, or roughly 15-20 percent of analysts' work time for an entire month. Chart courtesy of Siemplify.

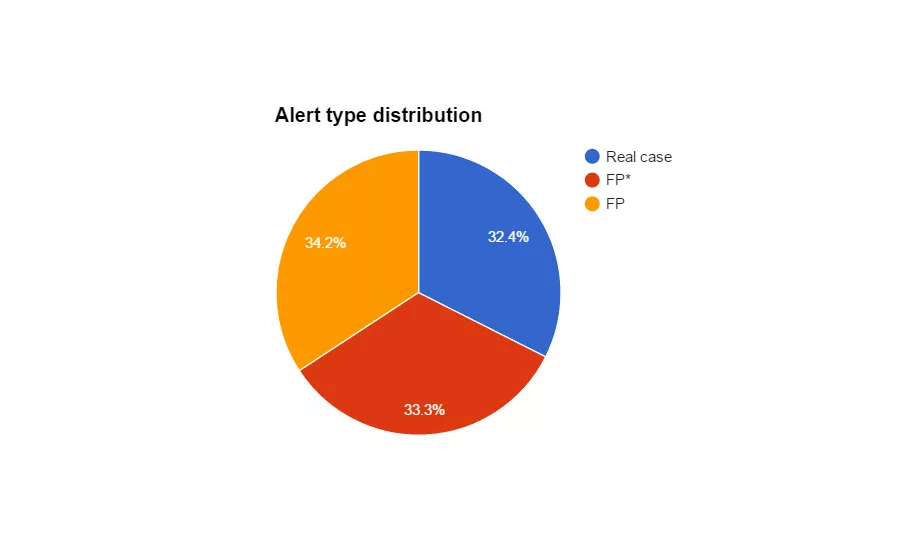

Chart 3: Very often, it is not the same analyst processing the original and duplicate alerts, further slowing down processing time. It stands to reason that a great deal of time and manpower could be saved if the same analyst were to be assigned each duplicate case he or she already solved. Chart courtesy of Siemplify.

As cyber attacks continue to grow in number and severity, many organizations find that they are unable to deal with the threat effectively. In an attempt to contain these threats, the modern security operations center (SOC) has become a complicated patchwork of disparate tools, each one designed to target the problem from its own angle. Each additional tool creates information that resides in its own silo, producing an endless stream of alerts to be processed. As these alerts pour in, they flood analysts with excess data, leaving them unable to discern the real threat from the noise and therefore unable to respond effectively.

Our researchers at Siemplify wanted to understand how many alerts, if any, were duplicates stemming from the myriad tools in use, and how much time and other resources the typical SOC wastes processing them. Our research team analyzed statistics collected over a three-month period from more than 9,500 alerts (cases) in the SIEM system of a typical Siemplify client. The findings were startling.

As we sifted through the data, we found that over a third of alerts were duplicates: exactly the same alerts that had already been “processed” and dealt with earlier. These duplicate alerts are likely one of the major contributing factors in flooding the SOC and creating a backlog that impedes analysts’ ability to do their jobs effectively.

Addressing this duplication and lack of visibility is mission-critical as SOC teams seek to mature and improve SOC effectiveness.

Definition of “Exact Duplicate” Alerts

Two alerts are considered exact duplicates if they have the same source and destination, if the same product issued the alert and if the name of the alert is the same. These important yardsticks allowed us to conduct our research within tightly controlled parameters.
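As an illustration only, the definition above can be expressed as a comparison key built from the four matching fields. The following is a minimal sketch, not the method used in the study: the `Alert` type, its field names and the `duplicate_rate` function are hypothetical, and it assumes that the first occurrence of a key counts as the original while every later occurrence counts as a duplicate.

```python
from typing import Iterable, NamedTuple, Tuple


class Alert(NamedTuple):
    """Hypothetical alert record; field names are illustrative assumptions."""
    alert_id: str      # unique per alert, deliberately NOT part of the key
    source: str
    destination: str
    product: str       # the product that issued the alert
    name: str          # the name of the alert


def duplicate_key(alert: Alert) -> Tuple[str, str, str, str]:
    """Key under which two alerts count as exact duplicates."""
    return (alert.source, alert.destination, alert.product, alert.name)


def duplicate_rate(alerts: Iterable[Alert]) -> float:
    """Fraction of alerts whose key was already seen earlier in the stream."""
    seen = set()
    total = 0
    duplicates = 0
    for alert in alerts:
        total += 1
        key = duplicate_key(alert)
        if key in seen:
            duplicates += 1   # assumption: only repeats count as duplicates
        else:
            seen.add(key)
    return duplicates / total if total else 0.0


sample = [
    Alert("a1", "10.0.0.5", "10.0.0.9", "IDS", "Port scan"),
    Alert("a2", "10.0.0.5", "10.0.0.9", "IDS", "Port scan"),   # repeat of a1
    Alert("a3", "10.0.0.7", "10.0.0.9", "IDS", "Port scan"),   # different source
]
rate = duplicate_rate(sample)
```

In this sample, the second alert matches the first on all four fields and is counted as a duplicate, while the third differs in its source and is not.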

One Third of Time Spent in Non-Critical Processes

Our analysis showed a repetitive alert rate of 33.7 percent: approximately one third of alerts were exact duplicates of others, meaning that by the time they reached analysts, they had already been processed. In effect, one third of analysts’ time is being spent processing alerts that, unknown to them, have already been processed. (See chart 1 above.)

If it were possible to weed out these duplicates and flag them as unimportant, or to let them through without a thorough investigation, the productivity boost would be immediate. At present, however, SOC teams have little ability to make this distinction, resulting in a massive drain on manpower.

Delving into the data further, we divided alerts into three categories: real alerts (true), false positives (FP) and true alerts that are caused by internal IT or SOC teams (FP*).

Each group made up approximately one third of the entire set of alerts examined. Looking at the total number of hours analysts spent processing this data set, with a team of two analysts per shift working around the clock (24/7), our researchers were able to extrapolate that over 190 hours of work time were spent processing these duplicate events, or roughly 15-20 percent of the analysts' work time for an entire month. (See chart 2 above.)

Routing of Cases

Our team also came across another interesting observation. As alerts pour in, they are assigned randomly to analysts, and this applies to duplicate alerts as well. Even when an incoming alert has already been processed and understood by one analyst, its next occurrence is handed off to whoever is available, despite the fact that someone has already picked the issue apart. Presumably, had the alert been sent back to the original analyst, he or she would have been able to assess its true nature much faster than an analyst who had never seen this particular alert before.

In chart 3 above, a sample of alerts was analyzed to understand how inconsistently duplicate cases are assigned back to their original analysts. As seen in the graph, very often it was not the same analyst processing the alert, further slowing down processing time. It stands to reason that a great deal of time and manpower could be saved if the same analyst were to be assigned each duplicate case he or she had already solved.
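To make the routing idea concrete, here is a minimal sketch of duplicate-aware assignment. It is an illustration under stated assumptions, not a description of any existing product: the `DuplicateAwareRouter` class and the analyst names are hypothetical, the alert key is assumed to be the (source, destination, product, alert name) tuple from the exact-duplicate definition, and simple round-robin stands in for "whoever is available."

```python
from typing import Dict, List, Tuple

# Assumed key: (source, destination, product, alert name).
AlertKey = Tuple[str, str, str, str]


class DuplicateAwareRouter:
    """Hypothetical router that sends repeats back to the original analyst."""

    def __init__(self, analysts: List[str]) -> None:
        if not analysts:
            raise ValueError("at least one analyst is required")
        self._analysts = list(analysts)
        self._owner: Dict[AlertKey, str] = {}   # key -> analyst who saw it first
        self._next = 0                          # round-robin position

    def assign(self, key: AlertKey) -> str:
        """Return the analyst who should handle an alert with this key."""
        owner = self._owner.get(key)
        if owner is not None:
            return owner                        # duplicate: reuse prior owner
        # New alert: round-robin as a stand-in for availability-based routing.
        analyst = self._analysts[self._next % len(self._analysts)]
        self._next += 1
        self._owner[key] = analyst
        return analyst


router = DuplicateAwareRouter(["analyst_a", "analyst_b"])
scan = ("10.0.0.5", "10.0.0.9", "IDS", "Port scan")
login = ("10.0.0.7", "10.0.0.2", "EDR", "Failed login")

first = router.assign(scan)     # new alert, goes to the next analyst in turn
second = router.assign(login)   # new alert, goes to the other analyst
repeat = router.assign(scan)    # duplicate, returns to whoever handled `scan`
```

A production system would also need to handle cases this sketch ignores, such as the original analyst being off shift or overloaded.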

Lack of End-to-End Visibility Gives Attackers an Advantage

Given the focus on detection over the last decade, SOC teams are inundated with alerts. It’s no surprise that many of these are repeat occurrences of the same alert, yet the magnitude of the problem and its impact on SOC teams are startling and unsustainable. Our research clearly shows that the SOC, as it functions today, is sorely lacking in visibility.

To deal effectively with the many issues stemming from the lack of end-to-end visibility, such as the duplicate alerts highlighted in our research, the SOC must evolve from the patchwork of disjointed tools currently employed into one cohesive and unified fabric. All incoming data should be regarded as interwoven parts of a whole and seen as one unfolding storyline. Given the proper framework and, most importantly, context, SOC teams should be able to identify and process duplicate alerts swiftly. The potential gains are significant:

- Reduction in caseloads;

- Elimination of repetitive work, with different analysts tripping over past investigations;

- Increase in analyst caseload capacity;

- And perhaps most importantly – driving focus on the real threats to accelerate remediation.

Only then, by providing the needed context and connection across alerts, can analysts mature towards the next-generation SOC. Until this streamlined operation is actualized, non-critical issues will continue to sap analysts of their precious time, effectively putting attackers yet one more step ahead in the ongoing race for integrity and security of corporate data.
