Are security teams equipped to handle overexposed data?

Virtually every company with a substantial cloud data environment has some measure of overexposed data. The consequences can range from benign to neutral, annoying or even catastrophic. To make the news, the level and consequences of overexposed data must be spectacular — a word that very few security executives would like to hear used about their operations.

One of the more damaging consequences of overexposed data is a breach that steals, destroys or ransoms sensitive data. A shocking number of breaches start off with cloud storage buckets (e.g. Amazon S3), which for any number of reasons can be full of sensitive data that the information technology (IT) team is unaware of. While there are plenty of solutions for securing sensitive data by location (an individual file server or storage device, for example), that doesn’t account for the tons of sensitive data that is casually shared within an enterprise.

For example, Jessica in Marketing sees an interesting chart in a spreadsheet that her friend, Joe in Accounting, made. He shares the spreadsheet with her, forgetting that the third tab lists the organization’s top 100 customers, complete with their contact and credit card information. Jessica shares it with her team, who then share it a few more times. In such a scenario, that confidential data can travel to many different computers, servers and cloud-based storage locations.

In situations of accidental information sharing, how can IT teams determine whether data is overexposed?

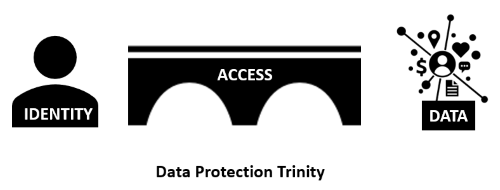

Trinity of data protection: Identity, access and data

Understanding the answer to that question can be boiled down to the trinity of data protection: identity, access and data. First, IT and cybersecurity professionals must understand identity. Then, they must understand identity in the context of the access that individuals, groups or roles have to systems and data. And most critically, security teams must understand the data itself—its value, purpose and sensitivity.

Image courtesy of Coppins and Spirion

Image courtesy of Coppins and Spirion

Looking at a bridge with identity on one side and data on the other, access is the conduit between the two. If identity or access are mismanaged, an organization ends up with overexposed data: the proverbial “bridge to nowhere.” Security leaders must also understand the severity, cost and consequences of that exposure. IT teams don't really care if the lunch menu is overexposed, but they must care if customer credit card information or sales forecasts are leaked.

Cybersecurity teams have long focused on access. But in a sense, they have been missing a lot of information that can lead to a reduction in overexposed data. Without good intelligence about the data they are trying to protect, a potentially damaging blind spot may exist that can be exploited.

In the spreadsheet example above, it doesn’t matter if all the accounting spreadsheets are secured in a single location, such as a file server. If that spreadsheet is emailed and then ends up on a server that the whole company has access to, then that customer list is exposed. So, focusing solely on data location is insufficient.

A key piece is missing: data intelligence.

Obviously, not all data is created equal, and different datasets have different value based on the organization, role, authority and other factors. How can identity and access systems distinguish between high- and low-value data? Who defines what high value data and low value data are?

Understanding the data

Understanding the value of data is critical for any identity and access system in order to identify, prevent and mitigate overexposed data. Otherwise, identity and access management becomes a container for a giant collection of data without the necessary intelligence to be effective.

To create that intelligence, security teams need to assess the relative value of data at the element, file and location level. At the element level, everybody knows that obtaining personal information like a name, date of birth, address or Social Security number can be useful for a bad actor. Cybersecurity solutions must be engineered to recognize that different data elements can be assembled to create a digital picture that can be damaging, if overexposed.

The second part of that data intelligence is knowing where sensitive elements live — including inside PDFs, as images, in MS Office files, emails, messages and databases — anywhere that data might reside. So, understanding the value of data elements and knowing the file types where they live is part of creating that intelligence.

The third pillar is data location: servers, cloud stores, endpoints, backup drives — anywhere data might be stored. IT teams must assume that there is sensitive data in all those locations until they can prove otherwise. But how do you prove that?

Data teams need a data discovery platform that can search for both common and custom data elements. It must search the places were data lives: within both structured and unstructured file types and across endpoints to the cloud. Having such a data discovery mechanism can help security teams understand an organization’s level of overexposed data risk.

The scope of really understanding sensitive data is somewhat overwhelming because today’s data environments are fluid. Files and data are rabbit-like in their ability to replicate in the cloud. On top of that, cybersecurity professionals have the challenge of associating the data elements back to the individual. This is a dynamic, complex computing problem that must be solved with modern tools such as machine learning and predictive analytics.

It is becoming clear that the scope and scale of the problem has grown beyond individuals peering into dashboards. Data security teams need to be constantly interrogating their data environments to search out sensitive files and data elements wherever they live and either suggest or take action. The security industry is only just starting to apply advanced analytics to the problem, which in the end, will require a combination of tools and techniques that span the enterprise. This is only starting to materialize in SecOps.

Data protection is a journey, not a project or product. Start by defining the value of the types of data in the organization. Understand how that valuable data is created or collected and ensure that the firm has policies and controls in place on how that data can be used and by whom. Then begin the process of discovering, classifying and remediating the organization’s data — location by location.

Starting now will help mitigate the risk of ransomware attacks, data breaches and leaked information reaching the dark web.

Looking for a reprint of this article?

From high-res PDFs to custom plaques, order your copy today!